Ready for a smarter way to scrape web data?

Complete the registration now and enjoy 22GB FREE

Post pages FAQs

No, Nimble Browser is a fully-managed service with zero infrastructure requirements. This allows you to focus solely on your data collection tasks.

Nimble Browser offers advanced AI technology for bypassing anti-bot systems, native integration with a premium proxy network, and flexible scalability. It ensures clean and accurate data, making it a solid choice for any web scraping needs.

Nimble Browser is fully compatible with existing tools like Puppeteer and Selenium, allowing you to upgrade with just one line of code.

Selenium offers cross-browser support, Puppeteer is designed for Chrome and Chromium, while Nimble Browser provides AI technology, scalability, and accurate data at any scale.

Cost considerations should include not just the initial price but also the value provided, such as speed, reliability, support, and legal compliance. Analyzing these factors in line with your budget and specific needs will guide you to the right choice.

Yes, but it is risky. Residential proxies offer anonymity, but a change of IP mid-session can get your accounts logged out or flagged. ISP proxies, which keep a stable IP, are the safer option.

ISP proxies are typically chosen for tasks that require faster speeds and less focus on anonymity, such as data scraping or market research. They might also be more cost-effective for certain use cases.

Nimble’s residential proxies leverage a special AI engine, allowing them to offer higher speeds than most other residential proxy providers, even rivaling ISP proxies. This unique technology ensures both high anonymity and impressive speed.

While free residential proxies may seem appealing, they often come with risks like unreliable performance, lack of support, and potential security concerns. It’s usually recommended to opt for a reputable provider that offers support and ensures safety and reliability.

ISP proxies are hosted by Internet Service Providers, offering faster speeds but less anonymity. Residential proxies use real IP addresses from residential locations, providing higher anonymity but typically at a slower speed.

Automating SEO and SEM monitoring saves businesses time and effort, reduces the risk of human error, and provides more accurate and timely insights. This enables businesses to make more informed decisions about their SEO and SEM strategies, leading to better search engine rankings and increased website traffic.

For further queries, feel free to contact us.

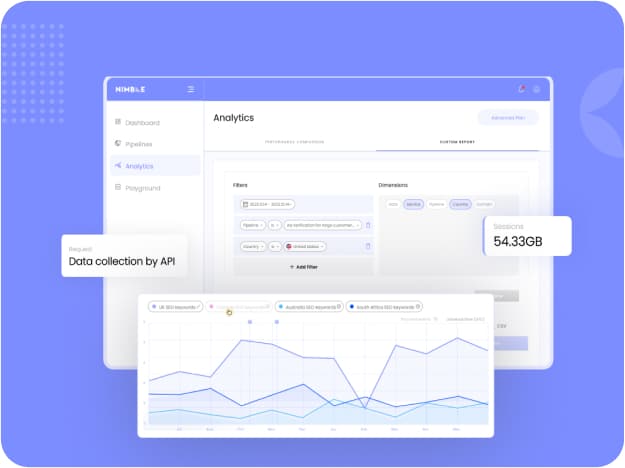

A: The Nimble Data Platform is a powerful tool for web data gathering. It’s made up of three layers:

- Nimble APIs for end-to-end, automated data collection.

- Nimble Browser for overcoming anti-bot obstacles.

- Nimble IP for AI-optimized premium proxies.

AI, such as Nimble’s built-in data structuring AI engine, can understand the structure and content of web pages and automate the extraction of key data points, such as SERP ranking and keywords. Nimble’s AI structuring engine can also react to changes in webpage structures in real-time, adapting to the new format and maintaining consistent and smooth data extraction.

SEO stands for Search Engine Optimization and SEM stands for Search Engine Marketing. Both are strategies to improve a business’s online presence and drive more traffic to their websites.

Bypassing an IP ban could be considered unethical, especially if you’re breaking terms of service or engaging in illegal activities. Limit your web scraping activities to public data only, and avoid scraping data behind a login screen.

Yes, it’s possible to be re-banned if you engage in the same behavior that led to the original ban, or if the service detects that you’re using a method to bypass restrictions.

Some methods, like VPNs or low-quality proxies, can affect internet speed. However, reputable proxy providers usually offer high-speed connections.

The legality of circumventing an IP ban varies by jurisdiction and the terms of service of the website you’re accessing. Always consult local laws and website policies.

Web scraping is used in various fields like market research, data analysis, and lead generation. It’s also common in sectors like real estate, finance, and e-commerce for gathering data.

Not necessarily. There are pre-built web scraping tools that require no coding. But if you need customized data or want more control, some coding knowledge is helpful.

The legality of web scraping is a grey area. It’s mostly okay if you’re scraping public data and not overloading servers. Always check a website’s terms of service to be sure.

Scrapy has built-in rate-limiting features such as the AutoThrottle extension. Beautiful Soup doesn’t offer this natively, so you’ll have to implement delays manually or use it alongside other tools that can handle rate-limiting.
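As a rough illustration of the manual approach, the sketch below spaces out work items by a minimum interval using only the standard library. The `throttled` helper and its parameters are hypothetical names made up for this example; they are not part of Beautiful Soup or Scrapy. In Scrapy itself, the equivalent behavior is switched on in the project settings with `AUTOTHROTTLE_ENABLED = True`.

```python
import time


def throttled(items, min_interval=1.0, sleep=time.sleep, clock=time.monotonic):
    """Yield items no faster than one per `min_interval` seconds.

    `sleep` and `clock` are injectable so the helper can be tested
    without actually waiting.
    """
    last = None
    for item in items:
        if last is not None:
            # Wait only for the part of the interval that has not elapsed yet.
            wait = min_interval - (clock() - last)
            if wait > 0:
                sleep(wait)
        last = clock()
        yield item


# Example: iterate over URLs with a short pause between them.
urls = ["https://example.com/page/1", "https://example.com/page/2"]
for url in throttled(urls, min_interval=0.01):
    print("would fetch and parse:", url)
```

In a real scraper, the fetch-and-parse step would replace the `print` call, and the interval would be chosen to respect the target site's limits.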

Not out-of-the-box. Scrapy doesn’t handle JavaScript, so you’ll need additional solutions like Selenium or Puppeteer to scrape dynamic websites.

Yes, Beautiful Soup is primarily a parsing library, so you’ll need other tools like requests for fetching web pages. It is also recommended to use proxies to avoid blockages.

Beautiful Soup is generally easier for beginners due to its simpler setup and user-friendly interface. Scrapy, while powerful, has a steeper learning curve.

Yes, you can combine Scrapy’s crawling abilities with Beautiful Soup’s parsing features for a more flexible and powerful web scraping solution.

It ensures entities remain updated with real-time conversations and trends.

They can skew the visibility and accessibility of certain data types, making comprehensive data collection challenging.

It necessitates advanced tools for seamless extraction and meaningful interpretation.

It provides instantaneous insights into public sentiment, emerging trends, and competitor strategies.

Continuous access ensures businesses get consistent and up-to-date insights.

Websites displaying region-specific content can make global data collection challenging.

It requires sophisticated tools for efficient extraction and interpretation.

It offers immediate insights, allowing businesses to respond to swift market changes.

It’s the practice of extracting data from websites for analysis and insights.

You can manage cookies manually or use specialized tools. If you want to avoid the technical details, Nimble’s web scraping tools can handle the whole process for you.

Cookies themselves aren’t harmful, but they can store sensitive information. It’s important to manage your cookies well and use secure settings to protect your data.

Cookies can both help and hinder web scraping. They can be used to maintain a session and collect data more accurately, but they can also be a red flag that leads to your scraper getting blocked.

HTTP cookies are small text files that websites store on your device. They help websites remember things about you, like if you’re logged in or what’s in your shopping cart.
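To make this concrete, here is a minimal sketch that reads the name/value pairs out of a cookie header with Python's standard `http.cookies` module. The header string and the `parse_cookie_header` helper are invented for illustration.

```python
from http.cookies import SimpleCookie


def parse_cookie_header(header):
    """Return the cookies in a `Cookie` header as a plain dict."""
    cookie = SimpleCookie()
    cookie.load(header)
    # Each entry is a Morsel object; .value holds the cookie's value.
    return {name: morsel.value for name, morsel in cookie.items()}


# Hypothetical header: a session ID plus a shopping-cart counter.
cookies = parse_cookie_header("sessionid=abc123; cart_items=3")
print(cookies)
```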

The duration can vary. It might be a few minutes or several hours. If the suggested fixes don’t work, you may need to wait it out, as it’s typically a temporary block.

Yes, proxies can help by diversifying your IP address, making it harder for Instagram to detect and flag your activity as suspicious.

Not necessarily. You should review your automation settings to ensure they comply with Instagram’s guidelines. If the error persists, consider reducing the use of these tools.

To fix this error, try changing your network connection, clearing your app’s cache, or reinstalling Instagram. Also, reduce any rapid or repetitive activity that could be seen as bot-like.

This error usually means Instagram’s system has flagged your account for unusual activity that resembles automated behavior, like using a bot.

Built on the Nimble Browser, the API uses AI-driven fingerprinting technology to navigate anti-bot measures on map platforms, ensuring consistent and reliable access.

Yes, the API supports batch processing, allowing for data collection across large regions or for multiple business categories at once.

Maps data offers insights into business operations, consumer sentiments, and market dynamics, making it essential for informed decision-making.

It’s an advanced data API that allows businesses to extract comprehensive data from public map listings, including business details and user reviews.

For better readability of JSON data, use the indent parameter in the json.dumps() method which pretty-prints the output.
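A short sketch of what that looks like, using a made-up record:

```python
import json

# Sample data invented for this example.
record = {"keyword": "web scraping", "rank": 3, "tags": ["seo", "serp"]}

# indent=2 puts each key and list item on its own line,
# indented two spaces per nesting level.
pretty = json.dumps(record, indent=2)
print(pretty)
```

Passing `sort_keys=True` as well can make diffs between two outputs easier to read.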

Yes, Python objects can be converted back to JSON format using the json.dumps() method for strings or the json.dump() method when writing to files.
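The difference between the two can be sketched as follows. An in-memory `io.StringIO` buffer stands in for a real file here; any writable text file object would work the same way.

```python
import io
import json

# Sample data invented for this example.
data = {"name": "Example Store", "rating": 4.5, "open": True}

# json.dumps() returns the JSON document as a string.
as_text = json.dumps(data)

# json.dump() writes the JSON document to a file-like object.
buffer = io.StringIO()
json.dump(data, buffer)

print(as_text)
print(buffer.getvalue())
```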

To handle JSON parsing errors, you can use try-except blocks to catch exceptions like JSONDecodeError and handle them appropriately.
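One possible shape for this, assuming you want to fall back to a default value when the input is malformed (`safe_parse` is a hypothetical helper name):

```python
import json


def safe_parse(text, default=None):
    """Parse JSON text, returning `default` if the text is not valid JSON."""
    try:
        return json.loads(text)
    except json.JSONDecodeError as err:
        # The exception records where parsing failed.
        print(f"Invalid JSON at line {err.lineno}, column {err.colno}: {err.msg}")
        return default


print(safe_parse('{"a": 1}'))
print(safe_parse("not json", default={}))
```

Whether to return a default, log and skip, or re-raise depends on how much a bad record matters to your pipeline.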

No, Python comes with a built-in json module that provides all the necessary functions to parse JSON data.

Yes, with Python, you can retrieve and parse JSON data directly from a website using libraries such as requests for fetching the data and json for parsing.

JSON is a preferred format for web scraping due to its lightweight nature and ease of use for both human understanding and machine processing, facilitating quick data exchange.

JSON parsing in Python is the process of converting JSON formatted data into a Python-readable form such as dictionaries or lists with the help of Python’s built-in json module.
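For example, the following sketch parses a small JSON string (invented for illustration) and reads values from the resulting dictionary and list:

```python
import json

# A made-up JSON payload, as it might arrive from an API response.
raw = '{"query": "proxy", "results": [{"rank": 1, "url": "https://example.com"}]}'

parsed = json.loads(raw)  # JSON object -> dict, JSON array -> list

first = parsed["results"][0]
print(type(parsed).__name__, type(parsed["results"]).__name__)
print(first["rank"], first["url"])
```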

Nimble provides streamlined web data-gathering services with advanced parsing capabilities, ensuring efficient and accurate data extraction for businesses.

The choice depends on specific business needs, technical capabilities, and budget considerations. Each option has its pros and cons.

Common formats include XML (eXtensible Markup Language), JSON (JavaScript Object Notation), and CSV (Comma-Separated Values).

Data parsing is crucial for businesses as it allows for efficient data utilization, aiding in informed decision-making and process automation.

Data parsing is the process of converting data from one format to another, making it usable in various applications.
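As a small illustration, the sketch below converts a JSON array of flat objects into CSV text with Python's standard library. It assumes every object has the same keys as the first one; `json_to_csv` is a hypothetical helper, not part of any Nimble product.

```python
import csv
import io
import json


def json_to_csv(json_text):
    """Convert a JSON array of flat objects into CSV text."""
    rows = json.loads(json_text)
    # Assumption: all rows share the keys of the first row.
    fieldnames = list(rows[0].keys())
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


csv_text = json_to_csv('[{"name": "A", "price": 9.5}, {"name": "B", "price": 12}]')
print(csv_text)
```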

A: Nimble’s proxy solutions are engineered for high performance and reliability, reducing your chances of encountering different proxy error codes. Plus, our top-notch support team is always ready to help you, making your experience as smooth as possible.

A: A proxy server can offer a level of anonymity and may come with security features like SSL/TLS encryption. However, not all proxies provide the same level of security. Always choose a reputable provider to ensure safety.

A: Yes, a 502 error can be linked to your proxy server. It usually means the server acting as a gateway couldn’t get a valid response from the server behind it. To solve this, try switching to a different proxy IP or checking your proxy settings.

A: This error pops up when your proxy server needs you to log in. Make sure you enter the right username and password. If you’re still stuck, contact your proxy provider for more help.

A: Common proxy errors include 400 Bad Request, 407 Proxy Authentication Required, and 502 Bad Gateway. Each has its own fixes—like checking the URL for 400 or entering correct credentials for 407. It usually comes down to verifying your settings and refreshing your connection.
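A minimal sketch of how a script might turn these status codes into a readable hint is shown below. The `SUGGESTED_FIXES` table and `describe_proxy_error` helper are illustrative names, and the fix texts simply paraphrase the advice above.

```python
from http import HTTPStatus

# Suggested first steps for the proxy errors discussed above.
SUGGESTED_FIXES = {
    HTTPStatus.BAD_REQUEST: "Check the request URL for mistakes.",
    HTTPStatus.PROXY_AUTHENTICATION_REQUIRED: "Verify the proxy username and password.",
    HTTPStatus.BAD_GATEWAY: "Retry, switch proxy IP, or review the proxy settings.",
}


def describe_proxy_error(status_code):
    """Return the status name plus a suggested first step."""
    try:
        status = HTTPStatus(status_code)
    except ValueError:
        return f"{status_code}: unrecognized status code"
    fix = SUGGESTED_FIXES.get(status, "No specific fix listed; check your proxy settings.")
    return f"{status.value} {status.phrase}: {fix}"


for code in (400, 407, 502):
    print(describe_proxy_error(code))
```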