How to Parse JSON in Python for Web Scraping with Nimble's API

JSON is one of the most popular data formats. Learn how to parse JSON in Python so you can make the most of the data you scrape.

In today’s digital landscape, JSON (JavaScript Object Notation) has emerged as a foundational data format, critical for web scraping and API data interchange.

JSON’s simplicity, paired with Python’s built-in json module, makes data parsing both powerful and accessible for developers, data analysts, and technical enthusiasts. Whether you're just getting started or refining your scraping techniques, this guide will show you how to efficiently parse JSON data in Python for web scraping projects, with a special focus on leveraging Nimble's API for optimal results.

Let’s get started!

What Is JSON?

JSON, or JavaScript Object Notation, is a lightweight, text-based format widely used for transmitting and storing structured data over the web. Its strengths are its simplicity, its easy-to-read structure, and its universal compatibility: JSON can be exchanged between many different platforms and programming languages while remaining readable by both humans and machines.

Because of this universality, JSON is considered a cornerstone of web technology, and is used in everything from web APIs to configuration files.

What Is JSON Parsing?

JSON parsing is the process of converting JSON-formatted data into a programming language's native data structures. This is done so the data can be directly manipulated in a program, making it easy to be utilized and integrated into applications and workflows.
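Here is a minimal illustration using a made-up product record. Before parsing, the data is a plain `str`. After `json.loads()`, it is a `dict` whose values can be used directly in Python code.

```python
import json

# Raw JSON text, as it might arrive in an HTTP response body (made-up example data)
raw = '{"product": "Desk Lamp", "price": 24.5, "in_stock": true}'

parsed = json.loads(raw)  # JSON text -> native Python objects

print(type(raw).__name__)     # str
print(type(parsed).__name__)  # dict

# Once parsed, values behave like ordinary Python values
total = parsed["price"] * 2
print(f"Two lamps cost {total}")  # Two lamps cost 49.0
```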

The Role of JSON in Web Scraping

JSON is popular in web scraping because of its versatility and readability. Its structured, machine-readable format powers API responses and dynamic data exchanges across countless applications, while its intuitive structure makes the data easy for developers to debug and manipulate.

JSON also scales well, especially when combined with Python’s ecosystem. Together, they can handle anything from small datasets to massive, real-time data streams from multiple endpoints.

Why Does JSON Go Well With Python?

JSON represents data as key-value pairs, arrays, and nested structures, which makes it highly compatible with Python's nearly identical native data structures. To break this down further:

- JSON’s key-value pairs map directly to the key-value pairs used in Python dictionaries.

- JSON arrays translate seamlessly into Python lists.

- Nested objects can be parsed into other dictionaries or lists, maintaining the hierarchy of the original JSON structure.

This compatibility eliminates the need for extensive transformations when working with JSON data, enabling Python developers to integrate JSON easily into their workflows. Python's standard library even includes the json module, making it simple to parse and generate JSON without installing third-party packages.
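To see the mapping in practice, the sketch below parses a small made-up document that uses every JSON value type and prints the Python type each value becomes.

```python
import json

# One JSON document that uses every JSON value type (made-up example data)
doc = '''
{
  "title": "Example page",
  "views": 1024,
  "rating": 4.7,
  "published": true,
  "archived": false,
  "editor": null,
  "tags": ["news", "tech"],
  "author": {"name": "Ada", "followers": 300}
}
'''

data = json.loads(doc)

# Show which Python type each JSON value became
for key, value in data.items():
    print(f"{key:<10} -> {type(value).__name__}")
```

The standard mapping is: object → dict, array → list, string → str, integer → int, real number → float, true/false → bool, and null → None.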

Why Does JSON Work Well With APIs?

JSON’s ease of use and universally accepted structure matter for APIs because APIs handle data exchange between clients and servers. Those clients and servers are often built on different architectures and programming languages, and a shared, language-neutral format like JSON lets them communicate reliably.

Because of this cross-platform compatibility, JSON has been widely adopted in API development. Many major APIs, including those from platforms like Twitter, Google, and Facebook, use JSON to send data between clients and servers.

Step 1: Installing Python for JSON Parsing

To begin working with JSON in Python, you need to make sure that Python is properly installed. Follow these steps for different operating systems:

Windows Installation:

- Visit the official Python website and download the latest version.

- Run the installer and be sure to check the box that adds Python to your PATH (the exact label depends on the installer version). This lets you run Python commands directly from your command line.

- After installation, confirm by running `python --version` in your command prompt.

MacOS Installation:

- Install Homebrew, a popular package manager for macOS.

- Run `brew install python` in the terminal to install the latest Python version.

- Verify the installation by typing `python3 --version`.

Alternatively, you can also visit the official Python website and follow the instructions it provides.

Linux Installation:

- Python typically comes pre-installed. You can check this by running `python3 --version`.

- If it’s not installed, install it using the package manager specific to your distribution. For example, on Ubuntu, run `sudo apt-get install python3`.

Post-Installation:

- Verifying Pip: Pip is Python’s package installer and should be included by default with Python 3.4 and above. Verify its installation by typing `pip --version` or `pip3 --version` in the command prompt or terminal.

- Setting Up a Virtual Environment: After installing Python, create a virtual environment for your project by running `python3 -m venv /path/to/new/virtual/environment` on macOS and Linux, or `python -m venv \path\to\new\virtual\environment` on Windows.

- Activating the Virtual Environment: Before you start working on your project, activate the virtual environment by running `source /path/to/new/virtual/environment/bin/activate` on macOS and Linux, or `\path\to\new\virtual\environment\Scripts\activate` on Windows.

By following these steps, you'll have a working Python installation on your computer, ready to tackle JSON parsing and other Python projects.

Step 2: Deserializing JSON with Python

Deserialization means converting JSON into a Python object. It's an essential process when dealing with data collected through various means, including proxy services that ensure the reliability of your data scraping efforts.

Import the JSON Module

To start working with JSON in Python, you need to bring the json module into your script. It's like unlocking the door to Python's toolkit for JSON data. Here's how you do it:

```python
import json
```

This single line gives you access to the module's main functions. `json.loads()` and `json.load()` read JSON from a string or a file into Python objects, while `json.dumps()` and `json.dump()` write Python objects out as JSON to a string or a file. With json imported, you're all set to decode and encode JSON.
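The snippet below is a quick sketch of the two pairs of functions. It round-trips a small dictionary through a string with `dumps()`/`loads()` and through a file-like object with `dump()`/`load()`. `io.StringIO` stands in for a real file here, so the example doesn't touch the disk.

```python
import io
import json

record = {"name": "John", "age": 30, "city": "New York"}

# String-based pair: dumps() encodes to a str, loads() decodes from a str
text = json.dumps(record)
print(text)                        # {"name": "John", "age": 30, "city": "New York"}
print(json.loads(text) == record)  # True

# File-based pair: dump() writes to a file object, load() reads from one.
# io.StringIO stands in for a file opened with open().
buffer = io.StringIO()
json.dump(record, buffer)
buffer.seek(0)  # rewind so load() reads from the start
restored = json.load(buffer)

print(restored == record)  # True
```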

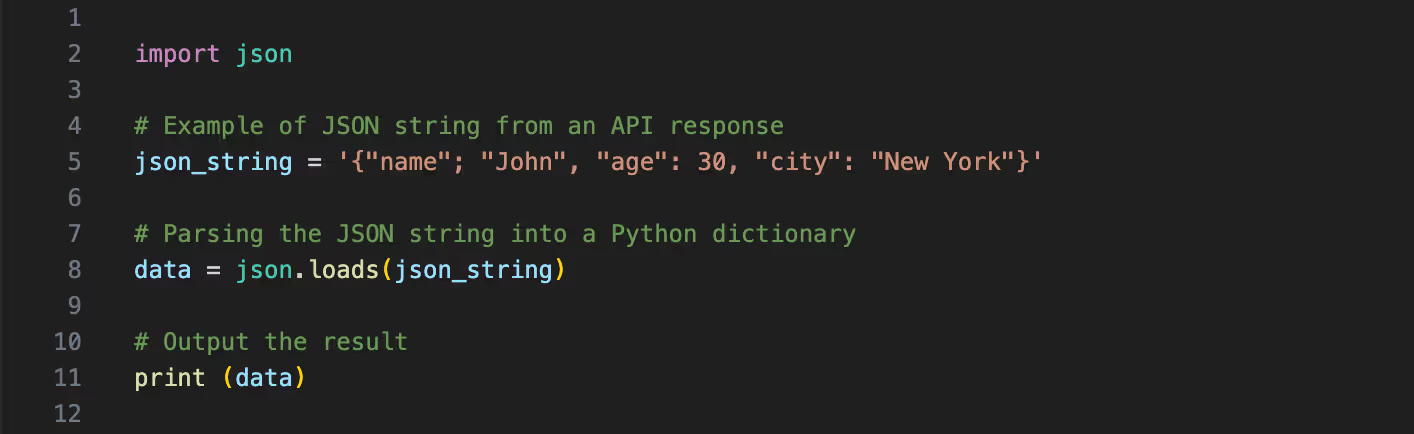

Step 3: How to Parse JSON Strings in Python

Once Python is installed, you can begin parsing JSON. For web scraping, JSON is often retrieved from APIs as a string. Let’s explore how to parse this string into a Python dictionary using Python's json module.

```python
import json

# Example of a JSON string from an API response
json_string = '{"name": "John", "age": 30, "city": "New York"}'

# Parse the JSON string into a Python dictionary
data = json.loads(json_string)

# Output the result
print(data)  # {'name': 'John', 'age': 30, 'city': 'New York'}
```

In this example, `json.loads()` converts the JSON string into a Python dictionary. This conversion is critical for web scraping because it turns the raw text of an API response into native Python objects you can work with programmatically.
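Real API responses are usually nested. The sketch below assumes a made-up response shape with a `results` list (this is not Nimble's actual schema). You can walk the parsed structure with ordinary indexing, `dict.get()` for optional keys, and loops:

```python
import json

# Hypothetical nested API response (field names are illustrative only)
response_text = '''
{
  "status": "success",
  "results": [
    {"title": "Laptop", "price": {"amount": 899.99, "currency": "USD"}},
    {"title": "Mouse", "price": {"amount": 19.5, "currency": "USD"}},
    {"title": "Cable"}
  ]
}
'''

data = json.loads(response_text)

items = []
for result in data.get("results", []):
    # .get() returns a default instead of raising KeyError when a key is missing
    price = result.get("price", {}).get("amount")
    items.append((result["title"], price))

for title, price in items:
    print(title, price)
```

Using `.get()` with defaults keeps one incomplete record (here, the "Cable" entry without a price) from crashing the whole loop.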

Step 4: Fetching JSON Data (Using Nimble’s Web API)

Of course, in order to parse JSON in Python, you first need to get data in the JSON format.

While you could build your own web scraping infrastructure from scratch to do this, Nimble’s web API offers a simple, streamlined approach to access public web data and convert it into the JSON format so that it can be used for new applications. The API can gather large amounts of accurate data, even in the face of challenges like rate limits, access restrictions and bot protections.

Let’s take a more detailed look at how to interact with Nimble’s API.

How to Use Nimble’s Web API to Gather Public Web Data

Nimble’s Web API can gather web data from any public web source, whether it be SERPs (search engine result pages), e-commerce websites, mapping services like Google Maps or Apple Maps, or social media. Data can be gathered in real time or asynchronously, and requests scale with the depth of your data gathering needs.

You can sign up for a free trial of the API here, and learn more about how to use it in our quick start guide.

Parsing Raw HTML Into JSON

Nimble’s Web API makes transforming raw HTML data into structured JSON format easy. Our parsing templates allow you to define which data you want to extract based on your specific needs, while our AI-powered parsing skills automate much of the process for you. The result is accurate, structured JSON data taken from raw web data that can then be further refined using Python parsing.

To learn how to set up JSON parsing in Nimble, read our Web API data parsing guide.
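Even well-structured output often needs a little cleanup in Python. The sketch below assumes a hypothetical parsed result with a `products` list whose prices and ratings arrive as strings. Your real field names depend on the parsing template or skill you configure. The code drops items without a price and converts the numeric fields to floats.

```python
import json

# Hypothetical parsed output; the real structure depends on your parsing
# template or skill, so treat these field names as placeholders.
parsed_output = json.loads('''
{
  "products": [
    {"name": "Office Chair", "price": "$1,299.00", "rating": "4.6"},
    {"name": "Desk Mat", "price": "$24.99", "rating": null},
    {"name": "Monitor Arm", "price": null, "rating": "4.2"}
  ]
}
''')


def to_number(text):
    """Convert strings like "$1,299.00" or "4.6" to float; pass None through."""
    if text is None:
        return None
    return float(text.replace("$", "").replace(",", ""))


# Keep only products that have a price, and normalize the numeric fields
rows = [
    {
        "name": p["name"],
        "price": to_number(p["price"]),
        "rating": to_number(p.get("rating")),
    }
    for p in parsed_output["products"]
    if p.get("price") is not None
]

for row in rows:
    print(row)
```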

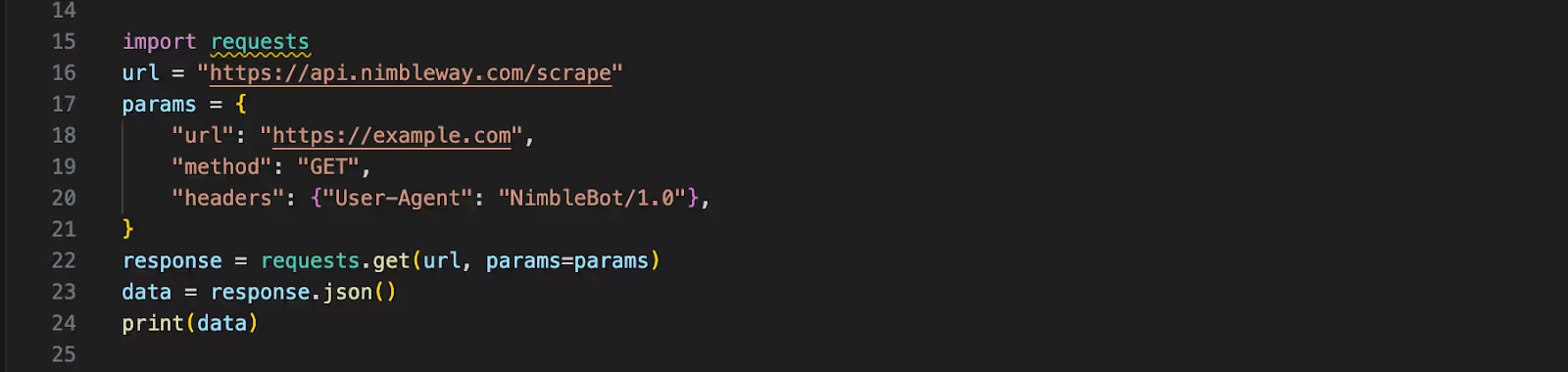

Example API Call

When using Nimble’s API with parsing skills turned on, structured JSON is returned as the default response format. Here’s a simple example of how to use Python and the third-party requests library (`pip install requests`) to retrieve and parse JSON data from an API.

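```python
import requests  # third-party: pip install requests

# Illustrative endpoint and payload; check Nimble's Web API documentation
# for the exact URL, parameters, and authentication scheme.
url = "https://api.nimbleway.com/scrape"

payload = {
    "url": "https://example.com",
    "method": "GET",
    "headers": {"User-Agent": "NimbleBot/1.0"},
}

# A nested object like "headers" can't be encoded as flat query parameters,
# so the payload is sent as a JSON request body instead.
response = requests.post(url, json=payload, timeout=60)
response.raise_for_status()  # fail fast on HTTP errors instead of parsing an error page

data = response.json()  # parse the JSON response body into Python objects
print(data)
```

Here, we’re asking the API to fetch a specific page and passing along a custom User-Agent header for that request. Nimble also handles common web scraping challenges like: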

- Rate Limiting: Many websites restrict the number of requests allowed in a given time frame. Nimble automatically manages request throttling to prevent getting blocked.

- Identifier bypassing: Nimble’s proxy solutions include residential proxies that handle CAPTCHAs and other identifier blocks without requiring manual input, making scraping seamless.

With this API setup, you’re able to scale your scraping operations without worrying about site defenses.

Step 5: Handling JSON Files in Python

In many web scraping workflows, you’ll often save data as JSON files. Here’s how Python simplifies reading from and writing to JSON files.

Reading from a JSON File

If you've saved JSON data from a scraping session, you can load it into your Python program for further analysis:

```python
import json

# Load previously saved scraping results
with open('data.json', 'r', encoding='utf-8') as file:
    data = json.load(file)

print(data)
```

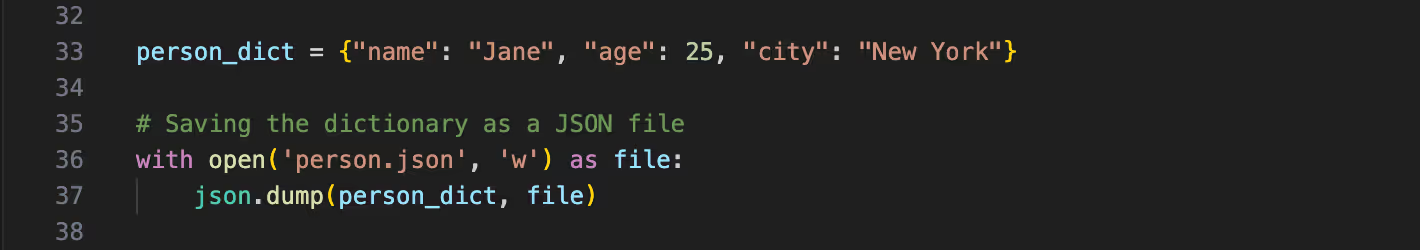

Writing JSON to a File

Once you’ve scraped and processed data, saving it in JSON format is straightforward:

```python
import json

person_dict = {"name": "Jane", "age": 25, "city": "New York"}

# Save the dictionary as a JSON file
with open('person.json', 'w', encoding='utf-8') as file:
    json.dump(person_dict, file)
```

This method writes the dictionary as a JSON file, which can be useful for exporting and sharing data collected through scraping.
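Scraped text often includes accented or non-Latin characters. By default, `json.dump()` escapes non-ASCII characters as `\uXXXX` sequences. Passing `ensure_ascii=False` together with an explicit UTF-8 encoding keeps the file human-readable. The sketch below uses made-up data and a temporary path, then reads the file back to confirm the round trip.

```python
import json
import os
import tempfile

# Made-up scraped record containing non-ASCII characters
review = {"author": "Zoë", "city": "São Paulo", "text": "Très bien!"}

path = os.path.join(tempfile.mkdtemp(), "review.json")

# ensure_ascii=False writes "ë" as-is instead of "\u00eb";
# encoding="utf-8" makes the file's encoding explicit on every platform.
with open(path, "w", encoding="utf-8") as file:
    json.dump(review, file, ensure_ascii=False, indent=2)

# Read it back to confirm the round trip preserved the data
with open(path, "r", encoding="utf-8") as file:
    loaded = json.load(file)

print(loaded == review)  # True
```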

Step 6: Advanced Techniques, Tips, and Tricks

Sometimes, JSON parsing with Python will require extra techniques, especially if you’re working with large or complex datasets. Here are some additional tips on ensuring readability, organization, and error-free parsing.

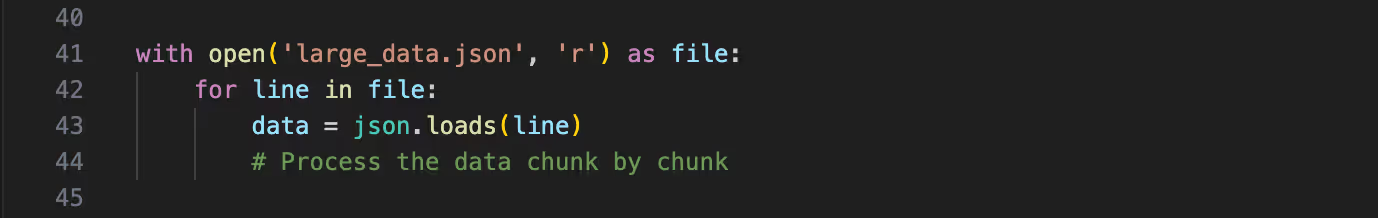

Working with Large JSON Files

For web scraping projects, JSON responses can become large, especially when dealing with rich datasets or paginated results from APIs. In such cases, loading the entire JSON document into memory at once can cause performance bottlenecks. `json.load()` always reads the whole file, so it can't process data piece by piece on its own. If you store scraped records as JSON Lines (one complete JSON object per line), you can read the file line by line and parse each record with `json.loads()`:

```python
import json

# Works for JSON Lines files: one complete JSON object per line
with open('large_data.json', 'r', encoding='utf-8') as file:
    for line in file:
        if line.strip():  # skip blank lines
            data = json.loads(line)
            # process one record at a time here
```

Alternatively, third-party libraries like ujson or rapidjson can significantly speed up parsing, especially when working with massive JSON datasets. Both are faster alternatives to Python’s native json module and are optimized for high-throughput environments. However, they still parse each document fully into memory.
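You can wrap the line-by-line approach in a pair of small helpers. These are not part of the json module: `write_json_lines()` and `iter_json_lines()` are hypothetical names. The sketch writes three made-up page records to a temporary `.jsonl` file, then reads them back lazily with a generator.

```python
import json
import os
import tempfile


def write_json_lines(path, records):
    """Write one JSON object per line (the JSON Lines format)."""
    with open(path, "w", encoding="utf-8") as file:
        for record in records:
            file.write(json.dumps(record) + "\n")


def iter_json_lines(path):
    """Yield one parsed record at a time, so only one line is held in memory."""
    with open(path, "r", encoding="utf-8") as file:
        for line_number, line in enumerate(file, start=1):
            line = line.strip()
            if not line:
                continue  # skip blank lines
            try:
                yield json.loads(line)
            except json.JSONDecodeError as e:
                print(f"Skipping line {line_number}: {e}")


path = os.path.join(tempfile.mkdtemp(), "pages.jsonl")
write_json_lines(path, [{"page": n, "items": n * 10} for n in range(1, 4)])

total_items = sum(record["items"] for record in iter_json_lines(path))
print(total_items)  # 60
```

Because `iter_json_lines()` is a generator, `sum()` consumes one record at a time. A malformed line is reported and skipped rather than stopping the whole run.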

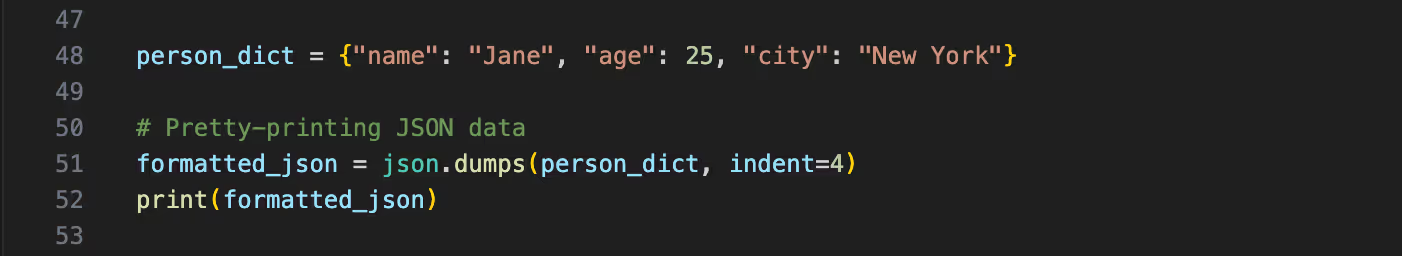

Formatting JSON for Readability

When you need to review JSON data, readability is crucial. Python’s json module makes it easy to format JSON data for better clarity, especially when dealing with large or complex data structures.

```python
import json

person_dict = {"name": "Jane", "age": 25, "city": "New York"}

# Pretty-print the JSON data with four-space indentation
formatted_json = json.dumps(person_dict, indent=4)
print(formatted_json)
```

This adds indentation, making the JSON output easier to read. Such formatting is especially useful when reviewing API responses or preparing data for reports.
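Beyond `indent`, `json.dumps()` accepts a few other formatting options. In this short sketch, `sort_keys=True` orders keys alphabetically, which makes it easier to compare two responses side by side. `separators=(",", ":")` removes the optional whitespace for compact storage.

```python
import json

person_dict = {"name": "Jane", "age": 25, "city": "New York"}

# For human review: indentation plus alphabetically sorted keys
print(json.dumps(person_dict, indent=2, sort_keys=True))

# For storage or transfer: no indentation and no spaces after separators
compact = json.dumps(person_dict, separators=(",", ":"))
print(compact)  # {"name":"Jane","age":25,"city":"New York"}
```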

Handling JSON Parsing Errors in Python

When working with JSON data—especially from unpredictable sources like web scraping—errors can occur. Python provides robust error handling to ensure your scraper doesn’t crash when facing invalid data.

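```python
import json

json_string = '{"name": "John", "age": 30'  # truncated response (missing closing brace)

try:
    data = json.loads(json_string)
except json.JSONDecodeError as e:
    print(f"Failed to parse JSON: {e}")
```

By catching JSONDecodeError, you can make sure your scraper handles malformed data gracefully instead of crashing and disrupting the entire workflow.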
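In a scraper, you'll often want this pattern in one reusable place. The sketch below wraps it in a hypothetical `safe_loads()` helper. The helper returns a fallback value on bad input and reports where parsing failed, using the exception's `lineno`, `colno`, and `msg` attributes. `JSONDecodeError` is a subclass of `ValueError`, so code that already catches `ValueError` will catch it too.

```python
import json


def safe_loads(text, default=None):
    """Parse JSON text, returning `default` instead of raising on bad input."""
    try:
        return json.loads(text)
    except json.JSONDecodeError as e:
        # lineno/colno point at where parsing failed, which helps debugging
        print(f"Bad JSON at line {e.lineno}, column {e.colno}: {e.msg}")
        return default


print(safe_loads('{"ok": true}'))               # {'ok': True}
print(safe_loads('{"ok": true,}', default={}))  # prints the error, then {}
```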

Conclusion

Mastering how to parse JSON in Python is a crucial skill for web scraping, especially when dealing with structured data from APIs like Nimble's. By integrating Nimble’s API into your scraping projects, you not only ensure efficient data collection but also enhance the reliability and speed of your scraping efforts. With Python's native JSON support, handling API responses and transforming data becomes a seamless process.

Are you ready to take your web scraping to the next level? Try Nimble's API today and unlock the full potential of public web data.

