How to Use Datasets in Machine Learning Projects: Expert Tips and Resources

Learn about machine learning dataset sources, selection criteria, and preprocessing so you can have the best data for your machine learning project.

Recent advances in machine learning (ML) have quickly changed how we approach problem-solving and decision-making across nearly every industry. As the core technology behind AI applications like facial recognition, large language models (LLMs), and predictive analytics, machine learning is everywhere, and both independent developers and massive companies are eager to create their own ML models.

At the heart of machine learning’s capabilities lies one critical component: data. This article explains why datasets matter in machine learning, where to find them, and how to prepare them for use in your machine learning project.

Introduction to Machine Learning Datasets

What is Machine Learning?

Machine learning is a subset of artificial intelligence (AI) that enables computer systems to learn and improve from experience rather than from explicitly programmed rules. In general, the more relevant, high-quality data an ML system is trained on, the more accurate its outputs tend to become, because it has more examples from which to infer patterns, similar to how humans learn.

Machine learning models are typically used to find patterns, make predictions, and support decisions. Some of them, such as deep neural networks, learn through multiple stacked layers.

What Is a Machine Learning Dataset?

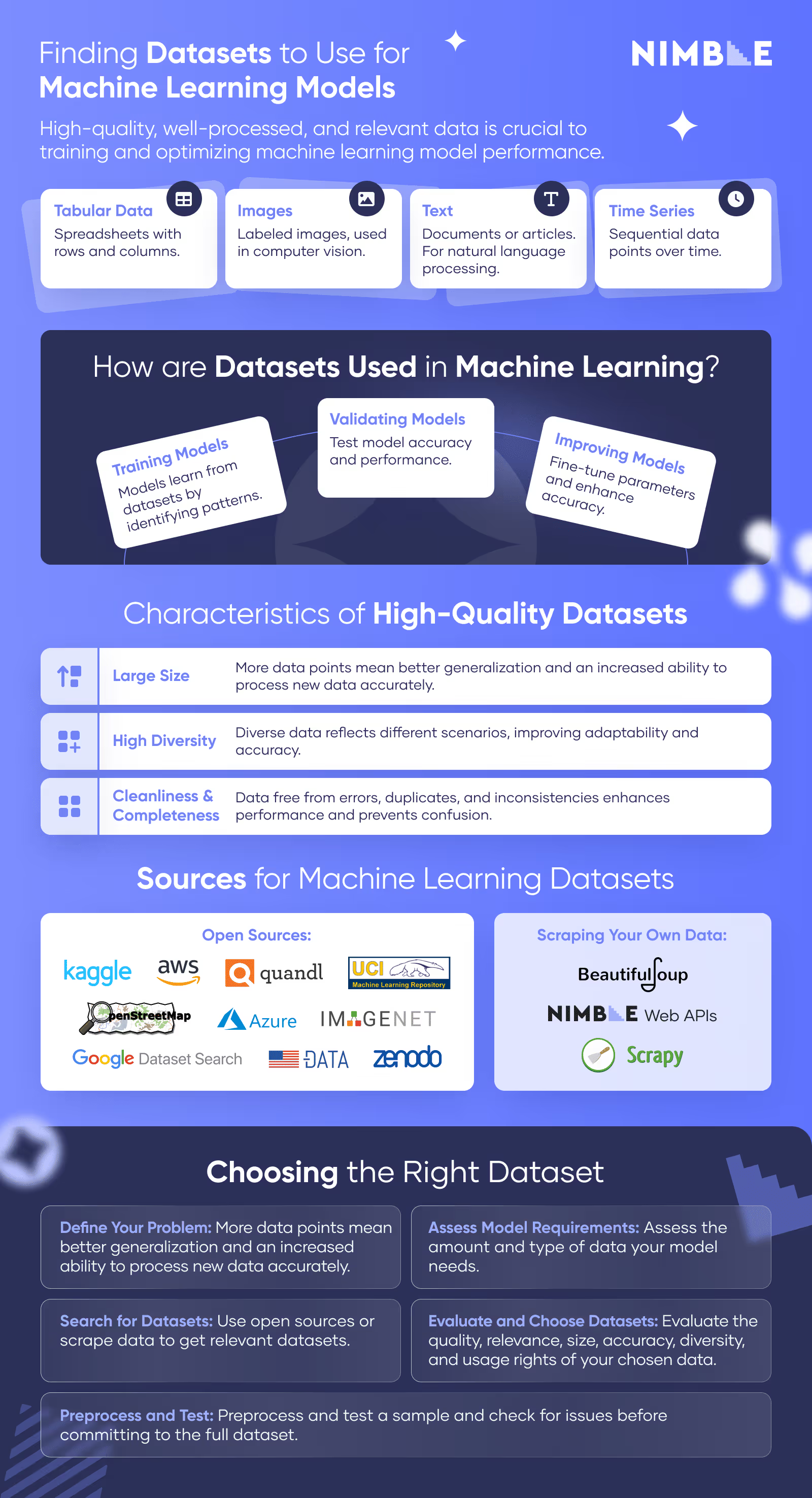

A machine learning dataset is a collection of data points used for training an ML model or evaluating its accuracy. Many different kinds of datasets can be used, including:

- Tabular Data: Spreadsheets with rows and columns.

- Images: Collections of images (typically labeled) used in computer vision.

- Text: Documents or articles. This is especially useful for natural language processing (NLP).

- Time Series: Sequential data points over time.

The Importance of Datasets in Machine Learning

The success of a machine learning model is dependent on the quality of data it is trained on. Without high-quality datasets, even the most advanced algorithms cannot perform effectively. Datasets in machine learning are used for:

- Training Models: The ML model learns from datasets by identifying patterns.

- Validating Models: Held-out data that the model did not see during training provides a benchmark for testing its accuracy and performance.

- Improving Models: Continuous updates and additional data can help fine-tune ML model parameters, reduce overfitting, and enhance accuracy over time.
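The separation between training and validation data can be sketched in a few lines. This is a minimal illustration using only the Python standard library; the function name `train_val_test_split`, the 70/15/15 proportions, and the integer stand-in data are assumptions made for the example, not a prescribed recipe.

```python
import random

def train_val_test_split(rows, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle rows and split them into train, validation, and test lists."""
    if not 0 <= val_frac + test_frac < 1:
        raise ValueError("val_frac + test_frac must be in [0, 1)")
    shuffled = list(rows)                    # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)    # seeded for reproducibility
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

examples = list(range(100))                  # stand-in for 100 labeled examples
train, val, test = train_val_test_split(examples)
print(len(train), len(val), len(test))       # 70 15 15
```

In practice, libraries such as scikit-learn offer an equivalent helper (`sklearn.model_selection.train_test_split`). The key point is that validation and test rows never appear in the training set.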

Characteristics of High-Quality Machine Learning Datasets

Low-quality data with missing values, outliers, inconsistencies, lack of variety, or low volume can lead to incorrect models, misleading results, wasted resources, and overfitting. The top qualities of good machine learning datasets you should look for as part of your dataset selection criteria include:

Large Size (Relative to Your Project)

Larger datasets provide a greater number of examples, which makes them more likely to include a wide variety of patterns and nuances. This helps ML models generalize from their training data and apply what they learned to new, unseen data, which leads to higher accuracy and more reliable performance.

Although every project is different, ML models generally need a relatively large number of data points to succeed. For example, computer vision models are often trained on ImageNet’s database of millions of labeled images. This helps them recognize and classify an object like a motorcycle in a wide variety of contexts, not just when the motorcycle is positioned normally in the center of the image.

High Diversity

A diverse dataset includes a wide range of examples that reflect the different scenarios the model might encounter in the real world. Training on diverse, real-world data helps the model handle a wide variety of inputs, which makes it more adaptable, versatile, and accurate when it faces real-world problems. Without diversity, an ML model will struggle with requests that stray too far from its training data.

For example, in natural language processing (NLP), a dataset with text from various genres, sources, and contexts helps the model understand and process different linguistic structures, styles, and vocabularies. This ensures it can handle requests from users who come from different backgrounds and have different language quirks like accents, dialects, or grammar and spelling differences.

However, too much diversity can also hurt your data, as it prevents ML models from finding the patterns they need to make accurate predictions. An NLP model built to understand English shouldn’t be fed Hindi or Spanish, or else it may produce outputs that blend features from the different languages.

Cleanliness & Completeness

Clean and complete data is free from errors, duplicates, inconsistencies, missing data, and other data “noise” that could confuse or misdirect a machine learning model. Clean data leads to better performance and reliability because it minimizes the amount of incorrect or misleading information the ML model learns from.

For instance, if you are building a model to predict customer churn in a subscription-based service, clean and complete training data would include past customer activity data with proper timestamps. It also shouldn’t include extra irrelevant data like website traffic statistics or unrelated customer demographics, duplicate data points, or temporal inconsistencies, like only one activity record for June 2023 but 5,000 activity records for July 2023.
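A quick audit can surface the problems described in the churn example before any training starts. The sketch below is illustrative only: the record layout `(customer_id, date, action)` and the `audit` helper are hypothetical, and a real dataset would need checks tailored to its own schema.

```python
from collections import Counter

# Hypothetical activity records: (customer_id, ISO date, action)
records = [
    ("c1", "2023-06-03", "login"),
    ("c1", "2023-07-01", "login"),
    ("c2", "2023-07-01", "upgrade"),
    ("c2", "2023-07-01", "upgrade"),   # exact duplicate
    ("c3", None, "login"),             # missing timestamp
    ("c3", "2023-07-09", "cancel"),
]

def audit(rows):
    """Return simple quality counts: duplicates, missing timestamps, rows per month."""
    duplicates = sum(count - 1 for count in Counter(rows).values())
    missing = sum(1 for _, date, _ in rows if date is None)
    per_month = Counter(date[:7] for _, date, _ in rows if date is not None)
    return duplicates, missing, dict(per_month)

duplicates, missing, per_month = audit(records)
print(duplicates, missing, per_month)
# 1 1 {'2023-06': 1, '2023-07': 4}
```

A large gap between monthly counts, like the June versus July imbalance described above, is a signal to investigate how the data was collected.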

Data rarely arrives clean and complete. You usually need to clean it yourself in a process called data preprocessing, which is covered later in this article. However, you should try to find a dataset that is as clean as possible from the start to make the process easier.

How to Find Machine Learning Datasets

Finding a machine learning dataset involves choosing between two options: using an open dataset from a repository, or scraping it on your own. Sometimes, you may need to combine the two.

Open Dataset Sources for Machine Learning

Here are several platforms and sources that offer a wide range of open datasets for machine learning:

- Kaggle: A platform with diverse datasets across different domains like healthcare, finance, and the social sciences. It’s extremely popular with data scientists and machine learning engineers.

- ImageNet: A database of labeled images frequently used for computer vision and image classification projects.

- UCI Machine Learning Repository: Well-documented, academically-used datasets for tasks like classification, regression, and clustering.

- Google Dataset Search: A search engine that aggregates and lets you search for datasets across the web.

- Amazon Web Services (AWS) Public Datasets: Open datasets covering various fields like genomics and satellite imagery. AWS also offers tools and services to help you analyze and process your data.

- Microsoft Azure Open Datasets: Curated open datasets optimized for machine learning. Topics covered include demographics, weather, and public safety.

- Data.gov: Thousands of datasets generated by the U.S. government, spanning topics like agriculture, climate, health, and education. Other countries and organizations, like the UK, Canada, the EU, the UN, and the World Bank, have similar websites.

- Quandl (now Nasdaq Data Link): Financial and economic data like stock market data and macroeconomic indicators. Unlike many of the free sources on this list, some of its data is only available with a paid subscription.

- Zenodo: A repository that allows scientific researchers to upload data in any format. It provides open-access datasets across scientific and academic disciplines.

- OpenStreetMap: Editable geographic data for applications in navigation, urban planning, and location-based services.

Scraping Your Own Data

If you need highly specific data that isn’t available publicly, like consumer sentiment on a certain product, you may need to scrape it yourself and compile your own datasets.

In this case, tools like Beautiful Soup and Scrapy have traditionally been the scraping go-tos, but they can be cumbersome, error-prone, and time-consuming. We recommend trying Nimble’s Web APIs to streamline your data scraping process.
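To give a sense of what the extraction step of scraping involves, here is a minimal sketch that uses Python's built-in `html.parser` rather than any of the tools named above. The HTML snippet, the `review` class name, and the `ReviewParser` class are all made up for illustration; fetching a live page is deliberately left out.

```python
from html.parser import HTMLParser

class ReviewParser(HTMLParser):
    """Collect the text of every <p class="review"> element."""

    def __init__(self):
        super().__init__()
        self.reviews = []
        self._in_review = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs
        if tag == "p" and ("class", "review") in attrs:
            self._in_review = True
            self.reviews.append("")

    def handle_data(self, data):
        if self._in_review:
            self.reviews[-1] += data

    def handle_endtag(self, tag):
        if tag == "p":
            self._in_review = False

# Hypothetical page content standing in for a downloaded product page.
page = """
<html><body>
  <p class="review">Great battery life.</p>
  <p class="ad">Buy now!</p>
  <p class="review">Stopped working after a week.</p>
</body></html>
"""

parser = ReviewParser()
parser.feed(page)
print(parser.reviews)
# ['Great battery life.', 'Stopped working after a week.']
```

Real pages are rarely this tidy, which is part of why hand-written scrapers tend to be brittle and time-consuming to maintain.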

Choosing the Right Dataset for Your Project

Selecting the appropriate dataset involves several steps:

Step 1: Define Your Problem and Objectives

Clearly articulate the problem you are trying to solve and the expected outcome. Narrow it down as specifically as possible, and think in terms of what kind of data it would require. For example, in a sentiment analysis project, your goal might be to classify text as positive or negative.

Step 2: Assess Your Model Requirements

Assess the amount of data required and the specific features needed for your model. For example, a complex deep learning model typically needs more data than a simple regression model.

Step 3: Determine Data Requirements

Identify the type of data needed (structured, unstructured, time series, etc.) and the required features based on the problem you’re trying to solve and the requirements of your model.

Step 4: Search for Datasets

Use the platforms mentioned above to find potential datasets that meet your needs, or scrape your own data.

Step 5: Evaluate and Select Datasets

Assess each candidate dataset’s quality, relevance, size, accuracy, completeness, cleanliness, and diversity. You should also check a dataset’s usage rights to ensure you are allowed to use it for your project. Pick the dataset that meets your requirements as closely as possible.
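One simple diversity check during evaluation is to look at how labels are distributed. The sketch below assumes a sentiment dataset like the one in Step 1; the `class_balance` helper and the 80/20 label mix are hypothetical.

```python
from collections import Counter

def class_balance(labels):
    """Return each label's share of the dataset, largest first."""
    counts = Counter(labels)
    total = len(labels)
    return [(label, count / total) for label, count in counts.most_common()]

labels = ["positive"] * 80 + ["negative"] * 20   # hypothetical sentiment labels
for label, share in class_balance(labels):
    print(f"{label}: {share:.0%}")
# positive: 80%
# negative: 20%
```

A heavily skewed distribution does not rule a dataset out, but it suggests you may need more examples of the rarer class.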

Step 6: Preprocess and Test

Preprocess a sample of the dataset and run initial tests to ensure it performs as expected. Try to identify any potential issues early on before committing to the entire dataset.

Data Preprocessing Techniques for Machine Learning Datasets

Preprocessing is crucial for ensuring your dataset does its job of teaching your ML model how to spot and analyze the patterns it needs to make predictions.

What is Data Preprocessing and Why Do I Need It?

Data preprocessing for machine learning is the process of preparing raw data so that it’s usable and understandable to an ML model. It is essential because real-world data is often messy, error-filled, misleading, and in formats ML models can’t read, so it needs to be processed before being used.

The data preprocessing steps you take will vary depending on how clean and complete your raw data is. In general, preprocessing involves some combination of data cleaning, integration, reduction, and transformation.

Steps to Preprocess Your Data for Machine Learning

The following is a quick summary of basic steps for preprocessing your machine learning data.

1. Data Cleaning

Data cleaning involves handling missing data, correcting errors, and removing duplicates to ensure the dataset is accurate and reliable.

- Remove or Fill Missing Values: Handle missing data points by either removing them or filling them with appropriate values.

- Correct Errors: Identify and correct errors or inconsistencies in the dataset, such as incorrect formats or implausible outliers.

- Eliminate Duplicates: Remove duplicate records to ensure the dataset contains unique entries.
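The three cleaning steps above can be sketched as follows. This is a minimal standard-library example; the row layout, the `clean` function, and the choice to fill missing ages with the median are assumptions for illustration, and other fill strategies may suit your data better.

```python
from statistics import median

# Hypothetical raw rows; None marks a missing value.
raw = [
    {"id": 1, "age": 34, "plan": "pro"},
    {"id": 2, "age": None, "plan": "free"},
    {"id": 2, "age": None, "plan": "free"},   # duplicate record
    {"id": 3, "age": 29, "plan": " Pro "},    # inconsistent formatting
    {"id": 4, "age": 51, "plan": "free"},
]

def clean(rows):
    """Normalize text, drop exact duplicates, and fill missing ages with the median."""
    # Correct errors: make the plan field consistent.
    normalized = [{**row, "plan": row["plan"].strip().lower()} for row in rows]

    # Eliminate duplicates: keep the first occurrence of each identical row.
    seen, unique = set(), []
    for row in normalized:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)

    # Fill missing values: use the median of the known ages.
    fill = median(row["age"] for row in unique if row["age"] is not None)
    return [{**row, "age": fill if row["age"] is None else row["age"]} for row in unique]

cleaned = clean(raw)
for row in cleaned:
    print(row)
```

With tabular data at scale, the same steps are commonly done with pandas methods such as `drop_duplicates` and `fillna`.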

2. Data Integration

Data integration involves combining data from different sources into a coherent dataset.

- Combine Datasets: Merge or join datasets from different sources to create a comprehensive dataset.

- Resolve Data Conflicts: Address conflicts in data values and ensure consistency across datasets.
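As a rough illustration of both points, the sketch below joins two hypothetical sources on a shared customer ID and records every field where they disagree. The `crm` and `billing` data, the `integrate` function, and the rule that the primary source wins are all assumptions; your own conflict policy may differ.

```python
# Hypothetical sources keyed by customer_id.
crm = {
    "c1": {"country": "US", "plan": "pro"},
    "c2": {"country": "DE", "plan": "free"},
}
billing = {
    "c1": {"plan": "pro", "monthly_spend": 49.0},
    "c2": {"plan": "pro", "monthly_spend": 0.0},    # disagrees with CRM on plan
    "c3": {"plan": "free", "monthly_spend": 0.0},   # no CRM record
}

def integrate(primary, secondary):
    """Inner-join two keyed sources; primary wins on conflict, conflicts are reported."""
    merged, conflicts = {}, []
    for key in sorted(primary.keys() & secondary.keys()):
        row = dict(secondary[key])
        for field, value in primary[key].items():
            if field in row and row[field] != value:
                conflicts.append((key, field, value, row[field]))
            row[field] = value               # primary source takes precedence
        merged[key] = row
    return merged, conflicts

merged, conflicts = integrate(crm, billing)
print(merged["c2"])
print(conflicts)   # [('c2', 'plan', 'free', 'pro')]
```

Keeping the list of conflicts, rather than silently overwriting values, makes it easier to review which source is actually trustworthy.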

3. Data Reduction

Data reduction aims to reduce the volume of data while maintaining its integrity. This can improve efficiency and reduce the computational cost.

- Feature Selection: Identify and select the most relevant features for model training to improve efficiency and reduce overfitting.

- Dimensionality Reduction: Use techniques like principal component analysis (PCA) to reduce the number of features while preserving essential information.
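A very simple form of feature selection is to drop columns that never change, since a constant feature cannot help a model tell examples apart. The sketch below shows that low-variance filter only; it is not PCA, which is usually done with a numerical library. The feature names and the `drop_low_variance` helper are hypothetical.

```python
from statistics import pvariance

# Hypothetical feature columns (name -> values), one value per example.
features = {
    "monthly_logins": [3, 18, 7, 25, 1],
    "account_type_code": [1, 1, 1, 1, 1],   # constant: carries no signal
    "support_tickets": [0, 2, 1, 0, 4],
}

def drop_low_variance(columns, threshold=0.0):
    """Keep only columns whose population variance exceeds the threshold."""
    return {
        name: values
        for name, values in columns.items()
        if pvariance(values) > threshold
    }

selected = drop_low_variance(features)
print(list(selected))   # ['monthly_logins', 'support_tickets']
```

scikit-learn provides comparable tools (`sklearn.feature_selection.VarianceThreshold` and `sklearn.decomposition.PCA`) for larger datasets.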

4. Data Transformation

Data transformation involves converting data into formats suitable for machine learning models, such as scaling numerical values or encoding categorical variables.

- Scaling Numerical Values: Normalize or standardize numerical features to ensure they are on a similar scale.

- Encoding Categorical Variables: Convert categorical data into numerical format using techniques like one-hot encoding.

- Feature Engineering: Develop new features that can help the model learn better and capture underlying patterns in the data.
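The scaling and encoding steps above can be sketched with the standard library alone. The helper names and sample values are illustrative assumptions, and feature engineering is left out because it depends heavily on the problem at hand.

```python
from statistics import mean, pstdev

def min_max_scale(values):
    """Normalize: rescale values linearly to the [0, 1] range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]         # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Standardize: shift to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

def one_hot(values):
    """Encode categories as 0/1 vectors, one column per category (sorted)."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]

spend = [10.0, 20.0, 40.0]                   # hypothetical numerical feature
print(min_max_scale(spend))
print(standardize(spend))

categories, encoded = one_hot(["free", "pro", "free"])
print(categories, encoded)   # ['free', 'pro'] [[1, 0], [0, 1], [1, 0]]
```

Whichever scaling you choose, compute its parameters (minimum, maximum, mean, standard deviation) on the training data only and reuse them for validation and test data, so that no information leaks from the held-out sets.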

Conclusion: Using High-Quality, Properly-Processed Machine Learning Datasets Is a Must

In summary, machine learning models rely on high-quality, properly processed, and appropriate data to optimize performance. Choosing and processing data can be a time-consuming process, but hopefully, these tips and steps can make it a bit easier.

If you want to get the latest updates on AI and machine learning, and on how to acquire and use data to train machine learning models, follow Nimble CEO Uri Knorovich on LinkedIn.
